ALPINE: Autoregressive Learning for Planning in Networks

Large Language Models (LLMs) such as ChatGPT have attracted wide attention because they can perform a broad range of tasks, including language processing, knowledge extraction, reasoning, planning, coding, and tool use. These abilities have spurred research into even more sophisticated AI models and hint at the possibility of Artificial General Intelligence (AGI).

The Transformer neural network architecture, on which LLMs are based, uses autoregressive learning to predict the next word in a sequence. The success of this architecture across so many intelligent tasks raises a fundamental question: why does predicting the next word in a sequence lead to such high levels of intelligence?

Researchers have been studying a variety of topics to better understand the power of LLMs. A recent work focuses on their planning ability. Planning is an important part of human intelligence and is involved in tasks such as organizing projects, planning travel, and proving mathematical theorems. By understanding how LLMs perform planning tasks, researchers hope to bridge the gap between basic next-word prediction and more sophisticated intelligent behavior.

In recent research, a team has presented the findings of Project ALPINE, which stands for “Autoregressive Learning for Planning In NEtworks.” The research examines how the autoregressive learning mechanisms of Transformer-based language models give rise to planning capabilities. The team also aims to identify possible shortcomings in the planning capabilities of these models.

To explore this, the team defined planning as a network path-finding task, in which the objective is to generate a valid path from a given source node to a given target node. The results show that Transformers can perform path-finding by embedding the adjacency and reachability matrices in their weights.
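To make the path-finding setup concrete, the following is a minimal sketch, not the model from the paper. It assumes a small directed acyclic graph stored as a dictionary; the graph and the helper names `reachability` and `find_path` are hypothetical. It builds a path one node at a time: the next node must be adjacent to the current node (adjacency information) and must either be the target or be able to reach it (reachability information).

```python
from typing import Dict, List, Set

def reachability(adj: Dict[int, Set[int]]) -> Dict[int, Set[int]]:
    """For each node, collect every node reachable by following one or more edges."""
    reach: Dict[int, Set[int]] = {}
    for start in adj:
        seen: Set[int] = set()
        stack = list(adj[start])
        while stack:
            node = stack.pop()
            if node not in seen:
                seen.add(node)
                stack.extend(adj.get(node, ()))
        reach[start] = seen
    return reach

def find_path(adj: Dict[int, Set[int]], reach: Dict[int, Set[int]],
              source: int, target: int) -> List[int]:
    """Generate a path node by node; return [] if no valid path exists."""
    path = [source]
    current = source
    # On an acyclic graph a path visits each node at most once,
    # so len(adj) steps is a safe upper bound.
    for _ in range(len(adj)):
        if current == target:
            return path
        # Keep neighbours that are the target or that can still reach it.
        candidates = [n for n in sorted(adj[current])
                      if n == target or target in reach[n]]
        if not candidates:
            return []
        current = candidates[0]
        path.append(current)
    return path if current == target else []

# Hypothetical example graph (a DAG), chosen only for illustration.
adj = {0: {1, 2}, 1: {3}, 2: set(), 3: {4}, 4: set()}
reach = reachability(adj)

print(find_path(adj, reach, 0, 4))  # [0, 1, 3, 4]
print(find_path(adj, reach, 2, 4))  # []
```

Here node 2 is adjacent to node 0 but is rejected at the first step because it cannot reach node 4. This is the role reachability information plays alongside adjacency in the sketch.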

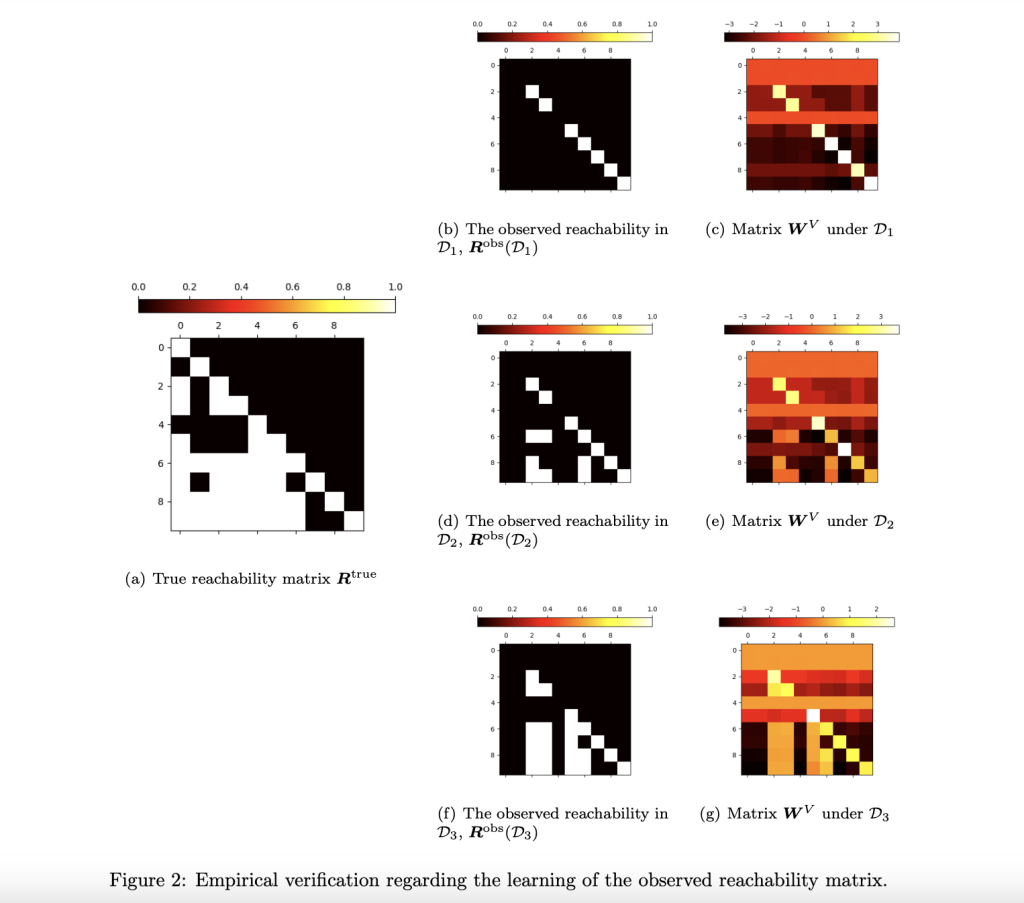

The team has theoretically investigated the gradient-based learning dynamics of Transformers. The analysis indicates that Transformers can learn the adjacency matrix together with a limited form of the reachability matrix. Experiments validated these theoretical findings, showing that Transformers learn both the adjacency matrix and an incomplete reachability matrix. The team also applied this methodology to Blocksworld, a real-world planning benchmark. The outcomes supported the primary conclusions, indicating that the methodology is applicable beyond the synthetic setting.

The study has highlighted a potential drawback of Transformers in path-finding: they cannot identify reachability relationships through transitivity. This implies that they would fail in situations where producing a complete path requires path concatenation. In other words, Transformers might not produce the correct path when doing so requires awareness of connections that span several intermediate nodes.
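The transitivity gap can be illustrated with a small sketch. It rests on a simplifying assumption that is not stated in this article: a node counts as "observed" to reach a target only if it appears on a training path that ends at that target. The training paths and the function names below are hypothetical. The sketch compares those observed pairs with the true reachability obtained by chaining edges.

```python
from typing import List, Set, Tuple

Pair = Tuple[int, int]  # (node, target): node can reach target

def observed_reachability(paths: List[List[int]]) -> Set[Pair]:
    """Pairs seen directly: every node on a path, paired with that path's target."""
    observed: Set[Pair] = set()
    for path in paths:
        target = path[-1]
        for node in path[:-1]:
            observed.add((node, target))
    return observed

def true_reachability(paths: List[List[int]]) -> Set[Pair]:
    """Pairs implied by chaining edges from all paths (transitive closure)."""
    edges = {}
    for path in paths:
        for a, b in zip(path, path[1:]):
            edges.setdefault(a, set()).add(b)
    closure: Set[Pair] = set()
    for start in edges:
        seen = set()
        stack = list(edges[start])
        while stack:
            node = stack.pop()
            if node not in seen:
                seen.add(node)
                stack.extend(edges.get(node, ()))
        closure.update((start, n) for n in seen)
    return closure

# Hypothetical training data: two paths that share node 2.
training_paths = [[0, 1, 2], [2, 3, 4]]

observed = observed_reachability(training_paths)
missing = true_reachability(training_paths) - observed

# Nodes that truly reach 4 but never appeared on a training path ending at 4.
print(sorted(n for n, t in missing if t == 4))  # [0, 1]
```

Under this assumption, a query from node 0 to node 4 requires concatenating the two training paths at node 2. A learner that only records the observed pairs has no evidence that node 0 or node 1 leads to node 4.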

The team has summarized their primary contributions as follows:

- A theoretical analysis of how Transformers carry out path-planning tasks through autoregressive learning.
- Empirical validation that Transformers can extract adjacency and partial reachability information and generate valid paths.
- Identification of the Transformers’ inability to fully capture transitive reachability relationships.

In conclusion, this research sheds light on how autoregressive learning enables planning in networks. The study adds to the understanding of the general planning capabilities of Transformer models and can help in the creation of more sophisticated AI systems that handle challenging planning tasks across a range of domains.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning. She is a Data Science enthusiast with strong analytical and critical thinking skills, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.