Google AI Releases Two Updated Production-Ready Gemini Models: Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002 with Enhanced Performance and Lower Costs

Google has released Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002, two updated, production-ready Gemini models that come with reduced pricing and increased rate limits. The updated models perform better across a wide range of tasks, making them more accessible and practical for businesses and developers alike.

Key Enhancements

1. Significant Benchmark Improvements

The updated Gemini models show gains on several key benchmarks:

MMLU-Pro Performance: The models have achieved a 7% increase on the MMLU-Pro benchmark, a more challenging variant of the popular MMLU benchmark, known for testing broad knowledge across various academic subjects.

Mathematical Problem-Solving: On the math-heavy benchmarks MATH and HiddenMath (an internal holdout set of competition math problems), the Gemini models show a 20% improvement, making them more capable at tackling complex mathematical problems.

Vision and Code: For vision and code-based tasks, the models exhibit improvements of 2-7%, with gains in visual understanding tasks as well as Python code generation benchmarks. This performance boost makes Gemini a strong contender for use cases ranging from image analysis to programming assistance.

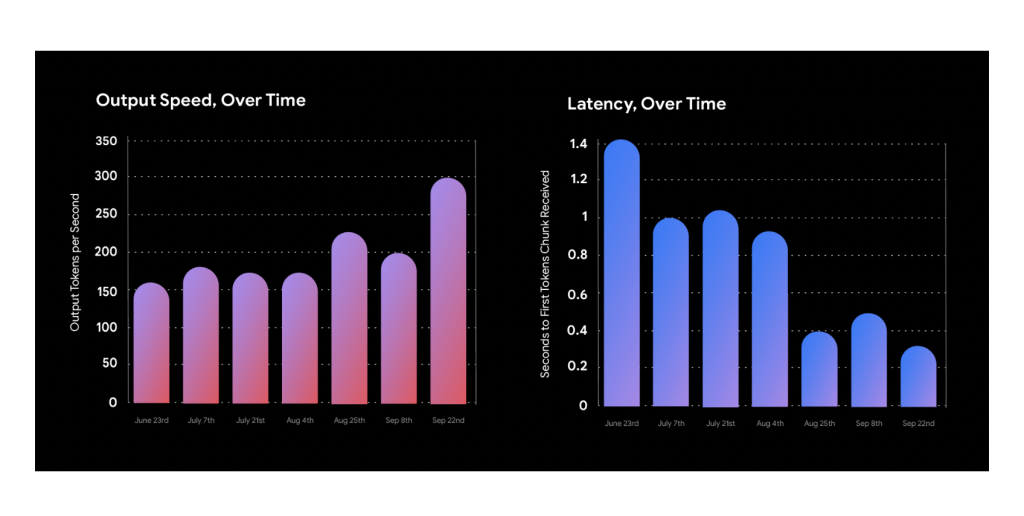

2. Production-Ready with Enhanced Scalability

The Gemini models are now production-ready, giving businesses a more scalable option for deploying AI in real-world scenarios. The models have been optimized for both large-scale and low-latency workloads, so developers can build AI-powered products that meet the performance and reliability needs of production environments.

3. 15% Price Reduction

One of the most important updates is the 15% reduction in pricing for the Gemini models. The lower cost reduces the barrier to entry, making it more feasible for small and medium-sized enterprises to incorporate advanced AI into their operations, whether for automating processes, improving customer service, or enabling more intelligent product features.
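
To make the effect of the reduction concrete, here is a minimal sketch of the arithmetic. The base price and monthly token volume are placeholders chosen for illustration, not Google's actual rates, and `discounted_cost` is a hypothetical helper.

```python
def discounted_cost(tokens: int, price_per_million: float, reduction: float = 0.15) -> float:
    """Return the cost for `tokens` tokens after a fractional price reduction."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return tokens / 1_000_000 * price_per_million * (1.0 - reduction)


# Placeholder workload: both numbers are assumptions for illustration only.
monthly_tokens = 200_000_000
old_price_per_million = 1.00  # hypothetical USD price per 1M tokens

before = discounted_cost(monthly_tokens, old_price_per_million, reduction=0.0)
after = discounted_cost(monthly_tokens, old_price_per_million)  # 15% reduction
print(f"before: ${before:.2f}  after: ${after:.2f}  saved: ${before - after:.2f}")
```

Because the reduction is proportional, the absolute saving grows linearly with usage, which is why it matters most for high-volume workloads.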

4. Increased Rate Limits

Google has also increased rate limits, allowing developers to send more requests in a given period. This is especially beneficial for applications requiring real-time processing, such as recommendation engines, real-time translation, or interactive customer service bots. With higher rate limits, applications built on the Gemini models can handle larger workloads without compromising on speed or efficiency.
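
Even with higher limits, a client usually needs to stay under whatever quota applies to it. The following is a minimal sketch of a client-side sliding-window limiter. The article does not state the actual limits, so the numbers in the usage example are placeholders, and `SlidingWindowLimiter` is a hypothetical helper rather than part of any Google SDK.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` calls in any `window_seconds` interval."""

    def __init__(self, max_requests, window_seconds=60.0,
                 clock=time.monotonic, sleep=time.sleep):
        if max_requests < 1:
            raise ValueError("max_requests must be at least 1")
        self._max = max_requests
        self._window = window_seconds
        self._clock = clock    # injectable so the limiter can be tested without waiting
        self._sleep = sleep
        self._sent = deque()   # timestamps of requests inside the current window

    def acquire(self):
        """Block until a request slot is free, then record the request."""
        while True:
            now = self._clock()
            # Forget requests that have aged out of the window.
            while self._sent and now - self._sent[0] >= self._window:
                self._sent.popleft()
            if len(self._sent) < self._max:
                self._sent.append(now)
                return
            # Wait until the oldest recorded request leaves the window.
            self._sleep(self._window - (now - self._sent[0]))


# Usage: call acquire() before each API request. The limit of 5 per
# minute is a placeholder, not a documented Gemini quota.
limiter = SlidingWindowLimiter(max_requests=5, window_seconds=60.0)
limiter.acquire()  # returns immediately while under the limit
```

When the provider raises a limit, only the `max_requests` value needs to change; the calling code stays the same.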

Key Benefits for Developers and Businesses

1. Improved Multimodal Performance

With advances across various benchmarks, the Gemini models now offer enhanced performance in multimodal tasks, which combine text, vision, and code. This positions the models as versatile solutions for developers building complex applications, including AI assistants, smart search tools, and content generation systems.

2. Better Efficiency in Real-Time Applications

The combination of increased rate limits and reduced latency opens new opportunities for developers working on real-time applications. The Gemini models are more capable of handling large-scale user interactions in real time, improving the overall user experience in AI-powered applications like chatbots, virtual assistants, and live support systems.

3. Cost-Effective AI at Scale

By lowering prices, Google makes it easier for startups and small businesses to use state-of-the-art AI capabilities. The reduction fits the broader industry trend of democratizing AI, enabling more companies to innovate without significant overhead costs.

Conclusion

Google’s updated Gemini models deliver on several fronts: better performance, lower cost, and improved scalability for production environments. The benchmark gains, particularly in challenging areas like math and code generation, make Gemini a strong choice for developers aiming to push the limits of AI capabilities. These updates help businesses, from startups to large enterprises, deploy high-performance AI models that are both affordable and robust.

As AI continues to evolve, the new Gemini models set a strong foundation for future development, driving innovation across industries while lowering the entry barriers for widespread AI adoption.

Developers can access these latest models for free via Google AI Studio and the Gemini API. All credit for this research goes to the researchers of this project.
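
For readers who want to try the models through the Gemini API, the following is a minimal sketch using only the Python standard library. It assumes the public REST endpoint and the lowercase model identifiers (`gemini-1.5-pro-002`, `gemini-1.5-flash-002`) in use at the time of this release; check the current API documentation before relying on either. The helpers `build_request` and `generate` and the `GEMINI_API_KEY` environment variable are names chosen for this example.

```python
import json
import os
import urllib.request

# Assumed base URL of the Gemini REST API at the time of this release.
API_BASE = "https://generativelanguage.googleapis.com/v1beta"


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build a generateContent request carrying a single text prompt."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        f"{API_BASE}/models/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def generate(model: str, prompt: str, api_key: str) -> str:
    """Send the request and return the text of the first candidate."""
    with urllib.request.urlopen(build_request(model, prompt, api_key), timeout=60) as resp:
        payload = json.load(resp)
    return payload["candidates"][0]["content"]["parts"][0]["text"]


# Runs only when a key is present in the environment (variable name is an assumption).
api_key = os.environ.get("GEMINI_API_KEY")
if api_key:
    print(generate("gemini-1.5-flash-002", "Summarize MMLU-Pro in one sentence.", api_key))
```

Keeping request construction separate from the network call makes it easy to swap the model name between the Pro and Flash variants and to inspect the payload without sending it.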


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.