MIT Researchers Released a Robust AI Governance Tool to Define, Audit, and Manage AI Risks

AI-related risks concern policymakers, researchers, and the general public. Although substantial research has identified and categorized these risks, the field still lacks a unified framework with consistent terminology and clear definitions. This lack of standardization makes it hard for organizations to create thorough risk mitigation strategies and for policymakers to enforce effective regulations. The variation in how AI risks are classified hinders efforts to integrate research, assess threats, and establish the shared understanding that robust AI governance and regulation require.

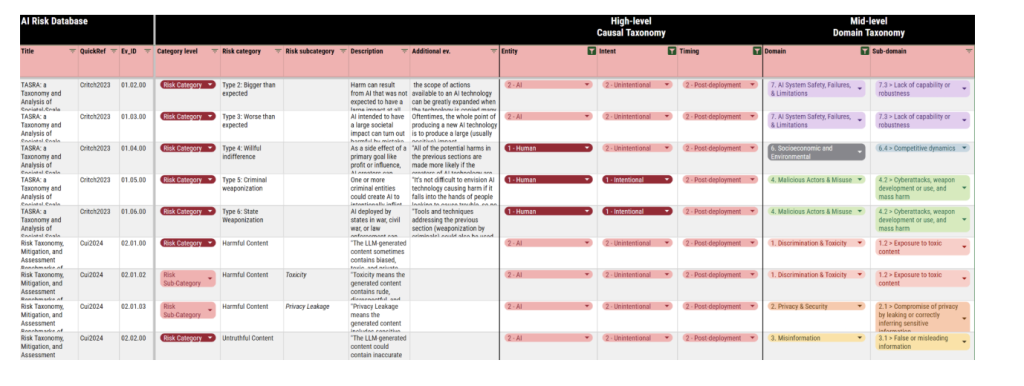

Researchers from MIT and the University of Queensland have developed an AI Risk Repository to address the need for a unified framework for AI risks. This repository compiles 777 risks from 43 taxonomies into an accessible, adaptable, and updatable online database. The repository is organized into two taxonomies: a high-level Causal Taxonomy that classifies risks by their causes and a mid-level Domain Taxonomy that categorizes risks into seven main domains and 23 subdomains. This resource offers a comprehensive, coordinated, and evolving framework to better understand and manage the various risks posed by AI systems.

To identify and classify AI risks, the researchers ran a comprehensive search that combined a systematic literature review, citation tracking, and expert consultation, resulting in an AI Risk Database. Two taxonomies were developed: the Causal Taxonomy, which categorizes risks by responsible entity, intent, and timing, and the Domain Taxonomy, which classifies risks into specific domains. The definition of AI risk, aligned with that of the Society for Risk Analysis, encompasses potential negative outcomes from AI development or deployment. After the search, the team extracted and synthesized the data, using a best-fit framework approach to refine the taxonomies until they captured all identified risks.

The study identified AI risk frameworks by searching academic databases, consulting experts, and tracking forward and backward citations. This process led to the creation of an AI Risk Database containing 777 risks from 43 documents. The risks were categorized using a “best-fit framework synthesis” method, resulting in two taxonomies: a Causal Taxonomy, which classifies risks by entity, intent, and timing, and a Domain Taxonomy, which groups risks into seven key areas. These taxonomies can be used to filter and analyze specific AI risks, aiding policymakers, auditors, academics, and industry professionals.
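To make the filtering idea concrete, here is a minimal Python sketch of how risks tagged with both taxonomies might be queried. It is an illustration only, not the repository's actual schema or tooling: the `RiskEntry` class, the `filter_risks` helper, the sample rows, and the specific label values (such as "Human", "Unintentional", "Post-deployment", or "Privacy") are all assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class RiskEntry:
    """One row of a hypothetical, simplified risk table."""
    description: str
    entity: str   # Causal Taxonomy: who or what causes the risk
    intent: str   # Causal Taxonomy: whether the outcome was intended
    timing: str   # Causal Taxonomy: when the risk arises
    domain: str   # Domain Taxonomy: top-level domain label


def filter_risks(
    risks: Iterable[RiskEntry],
    *,
    entity: Optional[str] = None,
    intent: Optional[str] = None,
    timing: Optional[str] = None,
    domain: Optional[str] = None,
) -> List[RiskEntry]:
    """Return the risks that match every criterion that was supplied."""
    criteria = {"entity": entity, "intent": intent,
                "timing": timing, "domain": domain}
    # Criteria left as None are ignored, so any combination can be used.
    active = {field: value for field, value in criteria.items()
              if value is not None}
    return [risk for risk in risks
            if all(getattr(risk, field) == value
                   for field, value in active.items())]


# Made-up sample rows; the real database holds 777 extracted risks.
SAMPLE_RISKS = [
    RiskEntry("Model leaks personal data from its training set",
              "AI", "Unintentional", "Post-deployment", "Privacy"),
    RiskEntry("Attacker uses a model to generate targeted scams",
              "Human", "Intentional", "Post-deployment", "Misuse"),
    RiskEntry("Biased training data is selected during development",
              "Human", "Unintentional", "Pre-deployment", "Discrimination"),
]

post_deployment = filter_risks(SAMPLE_RISKS, timing="Post-deployment")
human_unintentional = filter_risks(
    SAMPLE_RISKS, entity="Human", intent="Unintentional")
```

An auditor could combine causal and domain criteria in a single call, for example `filter_risks(SAMPLE_RISKS, entity="AI", domain="Privacy")`, to narrow the list to the risks relevant to one review.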

The study reviewed 17,288 articles and selected 43 relevant documents focused on AI risks. These documents included peer-reviewed articles, preprints, conference papers, and reports, most of them published after 2020. The review revealed diverse definitions and frameworks for AI risks, underscoring the need for more standardized approaches. The two taxonomies, Causal and Domain, were used to categorize the identified risks, highlighting issues related to AI system safety, socioeconomic impacts, and ethical concerns such as privacy and discrimination. The findings offer valuable insights for policymakers, auditors, and researchers, providing a structured foundation for understanding and mitigating AI risks.

The study offers detailed resources, including a website and database, to help readers understand and address AI-related risks. It provides a foundation for discussion, research, and policy development without arguing that any particular risk matters more than the others. The AI Risk Database categorizes risks using the high-level and mid-level taxonomies, which supports targeted mitigation efforts. The repository is comprehensive and adaptable, designed to support ongoing research and debate.

Check out the Paper and Details. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.